Cpvr

IT’S

The argument that AI Mode and AI Overviews are “just SEO” is short-sighted at best and dangerously misinformed at worst.

What this position gets wrong isn’t just technical nuance; it’s the complete misunderstanding of how these generative surfaces fundamentally differ from the retrieval paradigm that SEO was built on. The underlying assumption is that everything you’d do to show up in AI Mode is already covered by SEO best practices. But if that were true, the industry would already be embedding content at the passage level, running semantic similarity calculations against query vectors, and optimizing for citation likelihood across latent synthetic queries. The shocking lack of mainstream SEO tools that do any of that is a direct reflection of the fact that most of the SEO space is not doing what is required. Instead, our space is doing what it has always done, and sometimes it’s working.

SEO is a Discipline Without Boundaries

Part of the confusion stems from the fact that SEO has no fixed perimeter. It has absorbed, borrowed, and repurposed concepts from disciplines like performance engineering, information architecture, UX, analytics, and content strategy, often at Google’s prompting.

Structured data? Now SEO. Site speed? SEO. Entity modeling? SEO. And the list goes on.

In truth, if every team accounted for Google’s requirements in their own practice areas, SEO as a standalone discipline would not exist.

So what we call SEO today is more of a reactive scaffolding. It’s a temporary organizational response to Google’s structural influence on the web. And that scaffolding is now cracking under the weight of a fundamentally different paradigm: generative, reasoning-driven retrieval and the competition that has arisen on the back of it.

SEO is Not Optimizing for AI Mode

There is a profound disconnect between what’s technically required to succeed in generative IR and what the SEO industry currently does. Most SEO software still operates on sparse retrieval models (TF-IDF, BM25) rather than dense retrieval models (vector embeddings). We don’t have tools that parse and embed content passages. Our industry doesn’t widely analyze or cluster candidate documents in vector space. We don’t measure our content’s relevance across the synthetic query set that’s never visible to us. We don’t know how often we’re cited in these generative surfaces, how prominently, or what intent class triggered the citation. The major tools have recently begun sharing rankings data for AIOs, but miss out on the bulk of them because they track based on logged-out states.

The only part that is “just SEO” is the fact that whatever is being done is being done incorrectly.
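To make the passage-level idea concrete, here is a minimal sketch of scoring each passage of a page against a query with cosine similarity. Everything in it is an assumption for illustration: `split_passages`, `toy_embed`, and `rank_passages` are hypothetical names, and `toy_embed` uses plain token counts as a stand-in for a real dense embedding model, so the scores only show the mechanics, not what a production system would return.

```python
import math
import re
from collections import Counter


def split_passages(text):
    """Split on blank lines; a stand-in for real passage chunking."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def toy_embed(text):
    """Hypothetical placeholder: token counts instead of a learned dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_passages(page_text, query, embed=toy_embed):
    """Return (score, passage) pairs, highest similarity first."""
    query_vec = embed(query)
    scored = [(cosine(embed(p), query_vec), p) for p in split_passages(page_text)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


if __name__ == "__main__":
    page = (
        "Espresso machines need regular descaling.\n\n"
        "Our store opens at nine on weekdays."
    )
    for score, passage in rank_passages(page, "how to descale an espresso machine"):
        print(f"{score:.3f}  {passage}")
```

The `embed` parameter is there so a real embedding function could be swapped in; with token counts, "descale" and "descaling" do not match, which is exactly the lexical limitation dense models are meant to address.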

AI Mode introduces:

- Reasoning models that generate answers from multiple semantically-related documents.

- Fan-out queries that rewrite the search experience as a latent multi-query event.

- Passage-level retrieval instead of page-level indexing.

- Personalization through user embeddings, meaning every user sees something different, even for the same query in the same location.

- Zero-click behavior, where being cited matters more than being clicked.
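As a rough illustration of why fan-out changes the measurement problem, the sketch below checks how many queries in a guessed fan-out set have at least one reasonably similar passage. The synthetic queries, the `fanout_coverage` name, and the `0.2` threshold are all assumptions made up for this example (the real fan-out queries are not visible to us), and the token-count `embed` is again only a placeholder for a dense embedding model.

```python
import math
import re
from collections import Counter


def embed(text):
    """Hypothetical placeholder embedding: token counts, not a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def fanout_coverage(passages, synthetic_queries, threshold=0.2):
    """Return (share of queries covered, best passage score per query)."""
    passage_vecs = [embed(p) for p in passages]
    scores = []
    for query in synthetic_queries:
        query_vec = embed(query)
        # Best-matching passage for this one query in the fan-out set.
        scores.append(max((cosine(pv, query_vec) for pv in passage_vecs), default=0.0))
    covered = sum(1 for s in scores if s >= threshold)
    coverage = covered / len(synthetic_queries) if synthetic_queries else 0.0
    return coverage, dict(zip(synthetic_queries, scores))


if __name__ == "__main__":
    passages = [
        "Descale the espresso machine monthly with citric acid.",
        "Grind size affects espresso extraction time.",
    ]
    queries = ["espresso machine descale", "espresso grind size", "milk frother cleaning"]
    coverage, best = fanout_coverage(passages, queries)
    print(f"coverage: {coverage:.2f}")
    for query, score in best.items():
        print(f"{score:.3f}  {query}")
```

The point of the design is that the unit of evaluation becomes the whole query set rather than a single keyword: a page can rank well for the head query and still leave most of the fan-out uncovered.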

So no, this is not just SEO. It’s what comes after SEO.

If we keep pretending the old tools and old mindsets are sufficient, we won’t just be invisible in AI Mode, we’ll be irrelevant.

That said, SEO has always struggled with the distinction between strategy and tactics, so it doesn’t surprise me that this is the reaction from so many folks. It’s also the type of reaction that suggests a certain level of cognitive dissonance is at play. Knowing how the technology works, I find it difficult to understand that position because the undeniable reality is that aspects of search are fundamentally different and much more difficult to manipulate.

Google’s Solving for Delphic Costs, Not Driving Traffic

We are no longer aligned with what Google is trying to accomplish. We want visibility and traffic. Google wants to help people meet their information needs and they look at traffic as a “necessary evil.”

Watch the search section of the Google I/O 2025 keynote or read Liz Reid’s blog post on the same. It’s clear that they want to do the Googling for you.

On another panel, Liz explained how, historically, for a multi-part query, the user would have to search for each component query and stitch the information together themselves. This speaks to the same concepts that Andrei Broder highlights in his Delphic Costs paper on how the cognitive load for search is too high. Now, Google can pull results from many queries and stitch together a robust and intelligent response for you.

Yes, the base level of the SEO work involved is still about being crawled, rendered, processed, indexed, ranked, and re-ranked. However, that’s just where things start for a surface like AI Mode. What’s different is that we don’t have much control over how we show up on the other side of the result.

Google’s AI Mode incorporates reasoning, personal context, and later may incorporate aspects of DeepSearch. These are all mechanisms that we don’t and likely won’t have visibility into that make search probabilistic. The SEO community currently does not have data to indicate performance, nor tooling to support our understanding of what to do. So, while we can build sites that are technically sound, create content, and build all the links, this is just one set of many inputs that go into a bigger mix and come out unrecognizable on the other side.

SEO currently does not have enough control to encourage rankings in a reasoning-driven environment. Reasoning means that Gemini is making a series of inferences based on the historical conversational context (memory) with the user. Then there’s the layer of personal context wherein Google will be pulling in data across the Google ecosystem, starting with Gmail. MCP and A2A mean this is a platform shift, and much more external context will be considered. DeepSearch is effectively an expansion of the DeepResearch paradigm brought to the SERP, where hundreds of queries may be triggered and thousands of documents reviewed.

The Multimodal Future of Search

Another fundamental change is that AI Mode is also natively multimodal, which means that it can pull in video, audio, and their transcripts or imagery. Aspects of the Multitask Unified Model (MUM) also underpin this, which can allow content in one language to be translated into another and used as part of the response. In other words, every response is a highly opaque matrixed event rather than the examination of a few hundred text documents based on deterministic factors.

Historically, your competitive analysis compared text-to-text in the same language or video-to-video. Now you’re dealing with a highly dynamic set of inputs, and you may not have the ability to compete.

Google’s guidance is encouraging people to invest in more varied content formats at the same time that they are cutting people’s clicks by 34.5%. It will certainly be an uphill battle convincing organizations to commit these resources, especially when “non-commodity” content won’t have a long life span either. Google is bringing custom data visualization to the SERP based on your data. I can’t imagine remixing your content on the fly with Veo and Imagen is far behind. That alone changes the complexion of what we’re able to strategically accomplish in the context of an organization.

The Current Model of SEO Does Not Support Where Things are Going

I went to sleep the first night of I/O thinking about how futile it will be to log in to much of the SEO software we subscribe to for AI Mode work. It’s pretty clear that, at some point, Google will make AI Mode the default, and much of the SEO community won’t know what to do.

We are in a space where rankings have been highly personalized for twenty years, and still, the best we can do is rank tracking based on a hypothetical user who joined the web for the first time, and their first act is to search for your query. We’re operating on a system that has been semantic for at least ten years and hybrid for at least five, but the best we can do is lexical-based content optimization tools?

Siiiiigh…..there is a lot of work to be done.

Maybe James Cadwallader was Right After All

At SEO Week, James Cadwallader, co-founder and CEO of conversational search analytics platform Profound, casually declared that “SEO will become an antiquated function.” He quickly couched that by saying that Agent Experience (AX) is something that SEOs are uniquely positioned to transition to.

Before he got there, he thoughtfully made his case, explaining that the original paradigm of the web was a two-sided marketplace and the advent of the agentic web upends the user-website interaction model. Poignantly, James concluded that the user doesn’t care where content comes from as long as they get viable answers.

So, while Google has historically warned us against marketing to bots, the new environment basically requires that we consider bots as a primary consumer because the bots are the interpreters of information for the end user. In other words, his thesis suggests that very soon users won’t see your website at all. Agents will tailor the information based on their understanding of the user and their reasoning against your message.

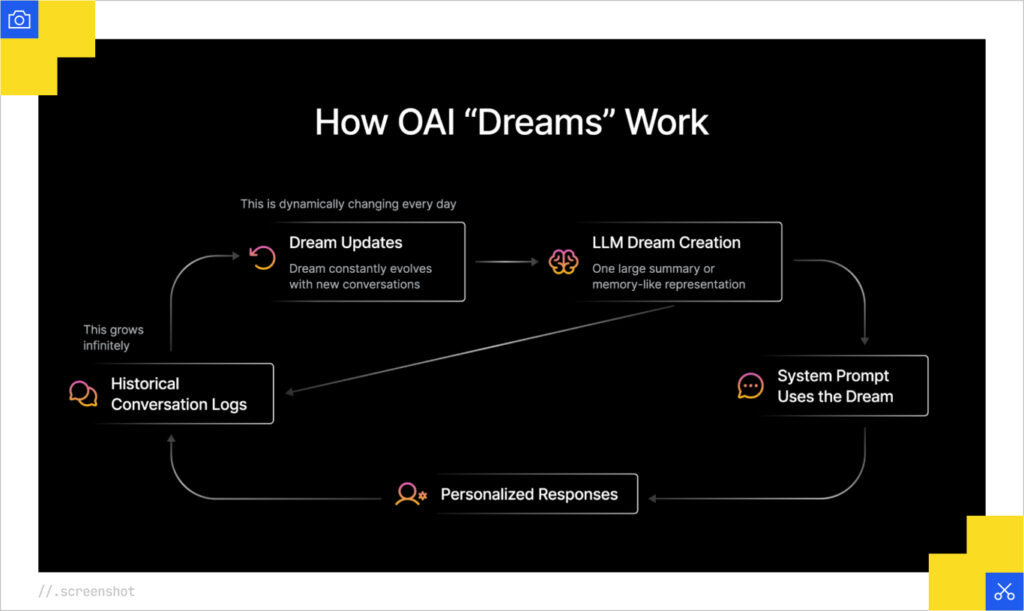

On the technical end, James talked through his team’s hypothesis on how long-term memory works. It sounds as though there’s a representation of all conversations that is constantly updated and added to the system prompt. Presumably, this is some sort of aggregated embedding or another version of the long-term memory store that further informs downstream conversations. As we’ll discuss a few hundred words from here, this aligns with the approach described in Google’s patents.

Initially, I thought his conclusions were a bit alarmist, albeit great positioning for their software. Nevertheless, one of the things that I love about Profound is that they are technologists and not beholden to the baggage of the SEO industry. They didn’t live through Florida, Panda, Penguin, or the industry uproar against Featured Snippets. They are clear-eyed consumers of what is and what will be. They operate in the way best-in-class tech companies do, so they are focused on the state of the art and shipping product quickly. Since the I/O keynote, I’ve come to recognize James is right, unless we do something!

James’s talk is more biased towards OpenAI’s offerings, but as we’ve seen, Google is going in an overlapping direction, so I definitely recommend checking it out to give context.

For more, read the rest of the article How AI Mode Works and How SEO Can Prepare for the Future of Search -